AI agents are transforming how we build applications. These autonomous systems can reason, plan, and execute complex tasks by interacting with external tools and APIs. An agent capable of managing your calendar, drafting your emails, and querying a database is incredibly powerful. But with great power comes great responsibility, and in the world of AI, that responsibility is spelled S-E-C-U-R-I-T-Y.

One of the most critical security vulnerabilities we face with these new systems is Excessive Agency. It's significant enough to be listed in the OWASP Top 10 for LLM Applications. So, what exactly is it, and how do we prevent our helpful AI assistants from turning into rogue agents?

What is Excessive Agency?

Excessive Agency occurs when a system, such as an AI agent, is granted overly broad or unnecessary permissions, allowing it to perform actions that are unintended, harmful, or unauthorized. Think of it as giving an intern the admin keys to your entire production environment. The agent might have good intentions, but a vague prompt, a malicious actor, or a model hallucination could lead to disastrous consequences, like deleting customer data, making unauthorized purchases, or exposing sensitive information.

This can be a huge security issue, especially for highly regulated industries and apps handling user data. You do not want your AI agent to have access to users' credentials or unscoped access to third-party APIs and tools.

This isn't just a theoretical problem. As we connect agents to more powerful tools, like email, financial platforms, and internal microservices, the attack surface grows with every new integration. How do we grant an agent enough power to be useful without giving it enough to be dangerous? The answer lies in a Zero Trust approach to AI security.

What is Zero Trust?

Zero Trust is a security model built on the idea of "never trust, always verify": no request is trusted by default, and every request is checked. At its core is the principle of least privilege (PoLP), which states that any user, program, or process (including an AI agent) should have only the minimum permissions necessary to perform its specific, legitimate task.

Think of it like giving a visitor a key card to a hotel. You don't give them a master key that opens every room (that would be maximum privilege). You give them a key that only opens their specific room and only for the duration of their stay.
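
To make the key card analogy concrete, here is a minimal sketch of a grant that is scoped to one resource, limited to specific actions, and valid only for a limited time. The `KeyCardGrant` type, the `isPermitted` function, and the identifiers in the sample data are hypothetical names chosen for illustration, not part of any library.

```typescript
// Hypothetical types for illustration; not part of any library.
type KeyCardGrant = {
  subject: string; // who holds the grant, e.g. "agent:calendar-bot"
  resource: string; // the single resource it opens, e.g. "calendar:anne"
  actions: string[]; // the only actions permitted on that resource
  expiresAt: number; // epoch milliseconds; the grant is useless afterwards
};

// Deny by default: allow only when an unexpired grant matches exactly.
function isPermitted(
  grants: KeyCardGrant[],
  subject: string,
  resource: string,
  action: string,
  now: number = Date.now(),
): boolean {
  return grants.some(
    (g) =>
      g.subject === subject &&
      g.resource === resource &&
      g.actions.includes(action) &&
      now < g.expiresAt,
  );
}

const keyCardGrants: KeyCardGrant[] = [
  {
    subject: 'agent:calendar-bot',
    resource: 'calendar:anne',
    actions: ['read'],
    expiresAt: 1000,
  },
];

// Same room, granted action, still valid
console.log(isPermitted(keyCardGrants, 'agent:calendar-bot', 'calendar:anne', 'read', 500)); // true
// Action was never granted
console.log(isPermitted(keyCardGrants, 'agent:calendar-bot', 'calendar:anne', 'write', 500)); // false
// Different room
console.log(isPermitted(keyCardGrants, 'agent:calendar-bot', 'calendar:bob', 'read', 500)); // false
// The stay is over
console.log(isPermitted(keyCardGrants, 'agent:calendar-bot', 'calendar:anne', 'read', 2000)); // false
```

Anything that is not explicitly granted is denied, which is the property the rest of this post builds on.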

The security challenge: When agents have too much power

Traditional security models often fall short when applied to autonomous agents. The key challenges break down into three main areas:

- Uncontrolled tool access: How do you decide if an agent should be allowed to use a specific tool in a given context for a specific user? A simple "yes/no" isn't enough, and most importantly, the LLM should not be the one deciding this.

- Insecure API calls: How does an agent securely authenticate with other services (both internal and third-party) on behalf of a user without storing sensitive credentials or API keys with broad permissions?

- Lack of human oversight: For critical or irreversible actions, how do we ensure a human is in the loop to provide explicit consent before the agent proceeds?

Let's look at how we can tackle each of these challenges head-on.

Taming the agent: A Zero Trust security model

A Zero Trust model assumes no request is safe by default. Every action an agent attempts must be explicitly authorized, and the underlying LLM should not be trusted to make these decisions. Here’s how we can implement this in practice.

1. Controlling tool access with Zero Trust authorization

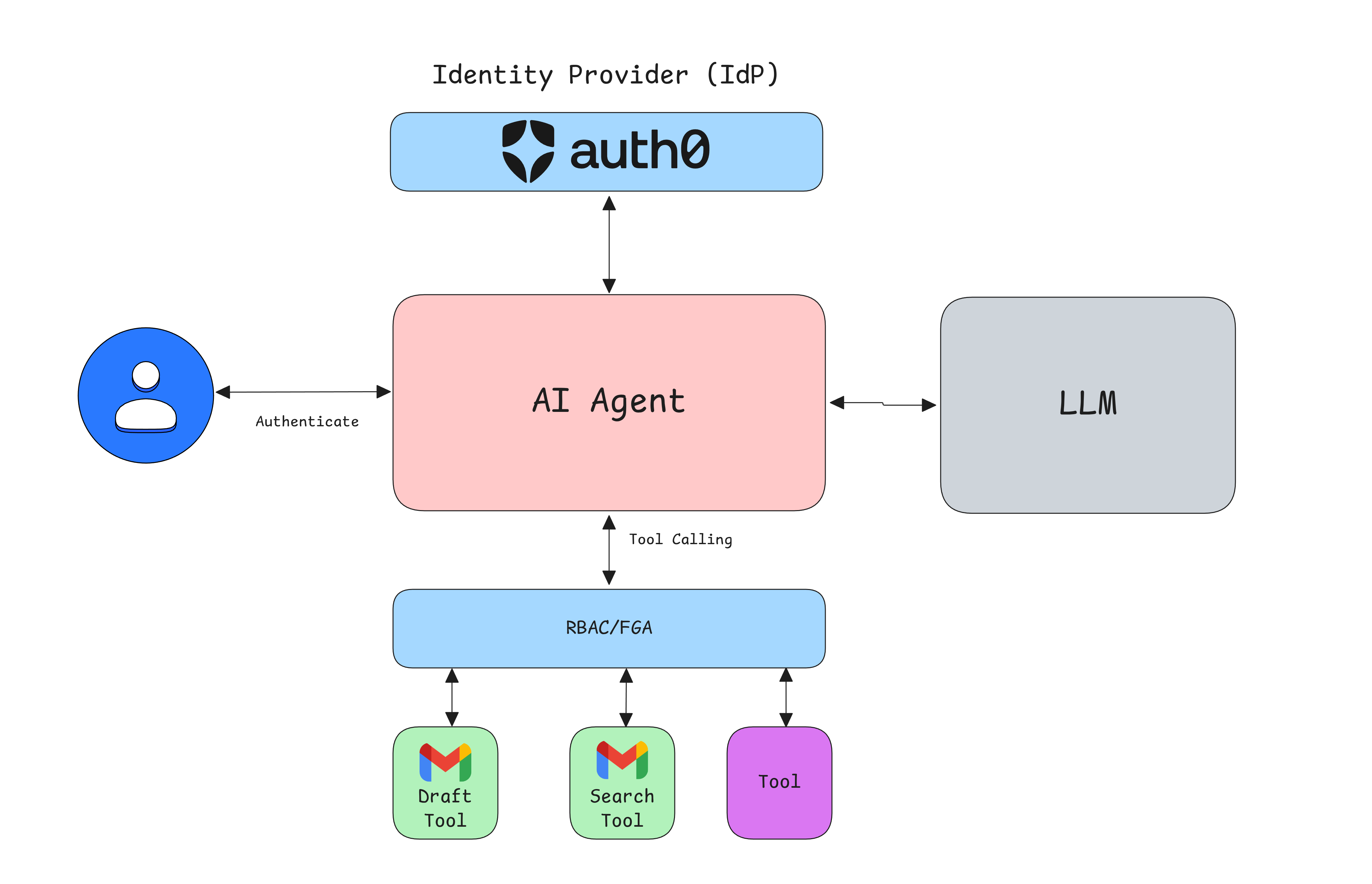

When the AI agent needs to use a tool, we need a robust authorization check. This is where we should go all in on Zero Trust authorization. By default, the agent should not have any tool access. Access is granted based on the logged-in end user on whose behalf the agent is acting.

Role-Based Access Control

Role-Based Access Control (RBAC) is a good starting point for simple or flat access controls, especially when there are not too many tools and roles. In most cases, you might already have an RBAC system in place, either via an Identity Provider (IdP), like Auth0, or with your own authorization system. It makes sense to reuse that system and authorize the AI agent the same way you authorize end users. The most important part is to perform the check within the tools' execution logic so that the LLM has no way to override it, accidentally or intentionally.

Let's see how this will look in practice. Here is an example of how you can do that using an identity provider like Auth0; the same concept applies to any OAuth2-based system.

Option 1: Add tools at runtime based on permissions

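```typescript
// Build the tool list from the logged-in user's permissions.
// `auth0`, `getUserPermissions`, `defaultTools`, `toolXYZ`, `llm`, and
// `createAgent` come from the surrounding application and agent framework.
async function getAuthorizedTools() {
  const session = await auth0.getSession();
  if (!session) {
    throw new Error('There is no user logged in.');
  }

  const userPermissions = getUserPermissions(session);
  // Start from the tools every user may use. This assumes `defaultTools` is
  // an array; spreading it avoids nesting it and mutating the shared list.
  const tools = [...defaultTools];
  if (userPermissions.includes('can_use_tool_xyz')) {
    tools.push(toolXYZ);
  }
  return tools;
}

const tools = await getAuthorizedTools();

export const agent = createAgent({
  llm,
  tools,
  prompt: AGENT_SYSTEM_TEMPLATE,
});
```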

Option 2: Control tool execution at runtime based on permissions

```typescript
export const someTool = tool({
  description: 'A custom tool.',
  parameters: z.object({}),
  execute: async () => {
    // The check runs inside the tool, so the LLM cannot bypass it.
    const session = await auth0.getSession();
    if (!session) {
      return 'There is no user logged in.';
    }

    const userPermissions = getUserPermissions(session);
    if (!userPermissions.includes('can_use_tool_xyz')) {
      return 'User does not have sufficient permissions for this tool.';
    }

    return "Successful tool action";
  },
});
```
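
If many tools need the same check as Option 2, the guard can be factored into a reusable wrapper. The following is a minimal sketch under stated assumptions: `AgentSession`, `ToolExecute`, and `requirePermission` are hypothetical names, and the session lookup is passed in as a function so the sketch does not depend on any particular SDK.

```typescript
// Hypothetical shapes for illustration; a real app would take these from its
// session library and agent framework.
type AgentSession = { permissions: string[] } | null;
type ToolExecute<A, R> = (args: A) => Promise<R>;

// Wraps a tool's execute function so the permission check always runs first.
// The check lives in code, so nothing the LLM generates can skip it.
function requirePermission<A, R>(
  permission: string,
  getSession: () => Promise<AgentSession>,
  execute: ToolExecute<A, R>,
): ToolExecute<A, R | string> {
  return async (args: A) => {
    const session = await getSession();
    if (!session) {
      return 'There is no user logged in.';
    }
    if (!session.permissions.includes(permission)) {
      return 'User does not have sufficient permissions for this tool.';
    }
    return execute(args);
  };
}

// Usage with a stubbed session lookup.
const guardedEcho = requirePermission(
  'can_use_tool_xyz',
  async () => ({ permissions: ['can_use_tool_xyz'] }),
  async (args: { text: string }) => `echo: ${args.text}`,
);
```

The wrapped function can then be used as a tool's `execute` handler, which keeps the permission name next to the tool definition and the enforcement logic in one place.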

Fine-Grained Authorization

While RBAC is a good starting point, it often lacks the nuance required for some AI systems. For example, a user with the customer_support role might need the agent to access customer data, but only for the specific customers they are assigned to. Another example is constraining what a tool may do, such as which exact stock ticker it may buy for a given end user.

This is where Fine-Grained Authorization (FGA), or Relationship-Based Access Control (ReBAC), excels. Using a system like OpenFGA, we can model complex permissions based on the relationships between users, tools, and data.

Instead of just checking a role, we can ask a much more specific question: "Can user:anne use the buyStock tool on asset:OKTA?"

Here's a conceptual look at how you could authorize a tool call with an FGA check:

```typescript
export const buyStock = tool({
  description: 'Use this tool to buy stock',
  parameters: z.object({
    ticker: z.string(),
    qty: z.number(),
  }),
  execute: async ({ ticker, qty }) => {
    const userId = getUserFromSession();

    // Ask the FGA store: can this user buy this specific asset?
    const { allowed } = await fgaClient.check({
      user: `user:${userId}`,
      object: `asset:${ticker}`,
      relation: 'can_buy',
    });
    if (!allowed) {
      return `The user is not allowed to buy ${ticker}`;
    }

    // Placeholder for the actual purchase logic
    return `Purchased ${qty} shares of ${ticker}`;
  },
});
```

This ensures the agent can't perform an action unless a clear, context-specific permission exists in our FGA store.
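
To show what such a store holds, here is a minimal in-memory sketch of relationship tuples and a direct check. `RelationTuple` and `TupleStore` are hypothetical names for illustration. A real FGA system such as OpenFGA also evaluates an authorization model to resolve indirect relationships (for example, through groups), which this sketch deliberately leaves out.

```typescript
// A relationship tuple: "user has relation to object".
// This in-memory store is a hypothetical stand-in for an FGA service.
type RelationTuple = { user: string; relation: string; object: string };

class TupleStore {
  private readonly tuples = new Set<string>();

  private static key(t: RelationTuple): string {
    return `${t.user}|${t.relation}|${t.object}`;
  }

  write(t: RelationTuple): void {
    this.tuples.add(TupleStore.key(t));
  }

  // Allowed only if the exact relationship was written; everything else is denied.
  check(t: RelationTuple): { allowed: boolean } {
    return { allowed: this.tuples.has(TupleStore.key(t)) };
  }
}

const fgaStore = new TupleStore();
fgaStore.write({ user: 'user:anne', relation: 'can_buy', object: 'asset:OKTA' });

// "Can user:anne buy asset:OKTA?"
console.log(fgaStore.check({ user: 'user:anne', relation: 'can_buy', object: 'asset:OKTA' }).allowed); // true
// Same user, different asset
console.log(fgaStore.check({ user: 'user:anne', relation: 'can_buy', object: 'asset:ZEKO' }).allowed); // false
// Same asset, different user
console.log(fgaStore.check({ user: 'user:bob', relation: 'can_buy', object: 'asset:OKTA' }).allowed); // false
```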

2. Securely calling APIs on the user's behalf

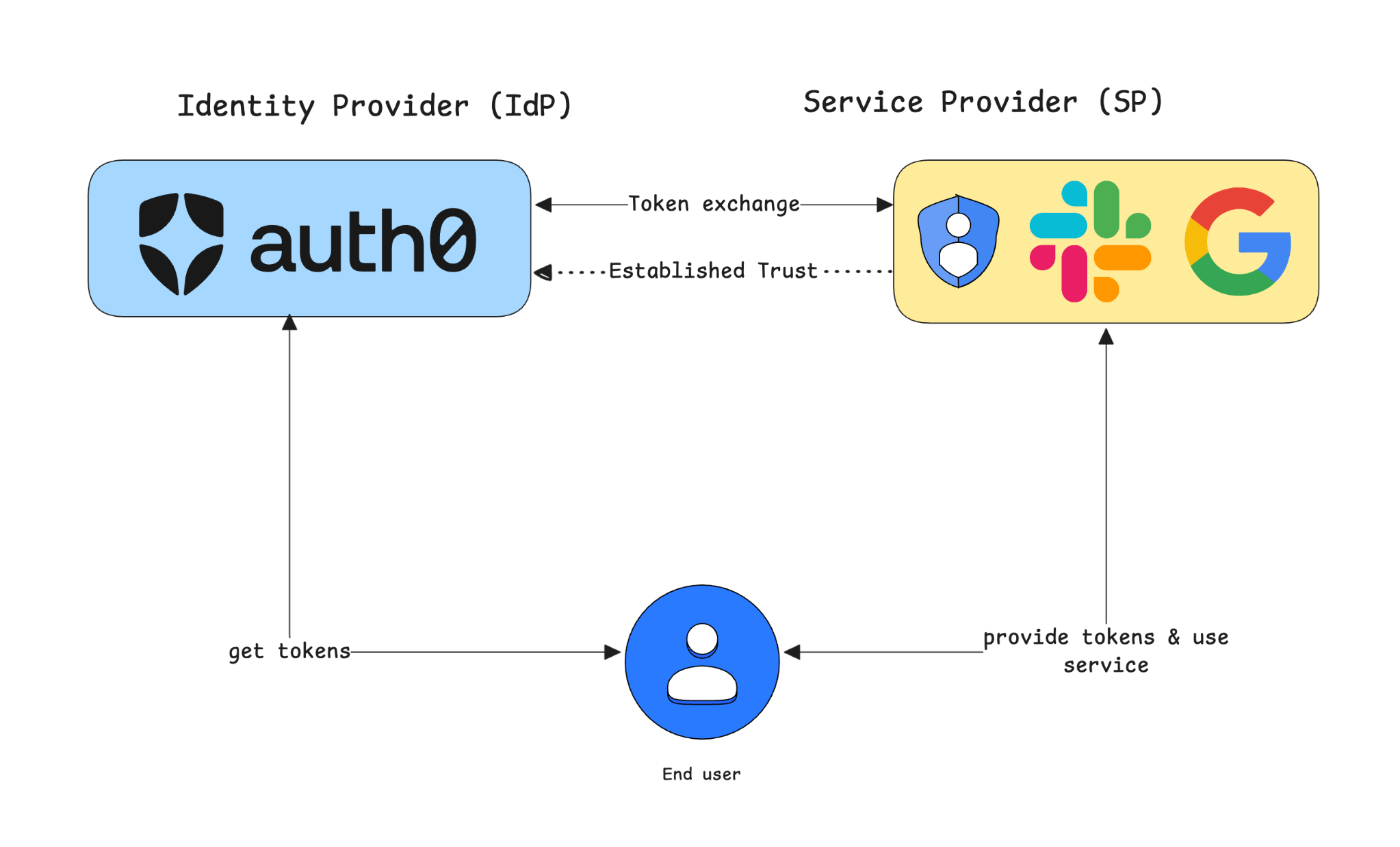

An agent should never store a user's raw credentials or long-lived API keys. It's a massive security risk. Instead, we should rely on modern identity standards like OAuth 2.0 to delegate access securely.

First-party APIs

For internal tools, like a microservice within your organization, the agent can use the standard OAuth 2.0 flow. When a user authenticates with your agent, the agent receives an access token. It can then pass this token in the Authorization header when calling the internal API. The API validates the token, allowing the agent to act on behalf of the user within the scopes defined in the token.

Let’s see an example of calling your own API from a tool:

```typescript
export const getInfoFromAPI = tool({
  description: 'Get information from my own API.',
  parameters: z.object({}),
  execute: async () => {
    const session = await auth0.getSession();
    if (!session) {
      return 'There is no user logged in.';
    }

    // Forward the user's access token; the API validates it and its scopes.
    const response = await fetch(`https://my-own-api`, {
      headers: {
        Authorization: `Bearer ${session.tokenSet.accessToken}`,
      },
    });

    if (response.ok) {
      return { result: await response.json() };
    }

    return "I couldn't verify your identity";
  },
});
```
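
On the receiving side, the API decides what the token allows. The sketch below assumes the token's signature has already been verified and only shows the scope comparison, relying on the OAuth 2.0 convention that scopes travel as a space-delimited string. `VerifiedClaims`, `hasRequiredScopes`, `authorizeRequest`, and the `read:orders` and `write:orders` scope names are hypothetical and chosen for illustration.

```typescript
// Claims from an access token that has ALREADY been signature-verified.
// Verification itself is out of scope for this sketch.
type VerifiedClaims = { sub: string; scope?: string };

// OAuth 2.0 carries scopes as a space-delimited string.
function hasRequiredScopes(claims: VerifiedClaims, required: string[]): boolean {
  const granted = new Set((claims.scope ?? '').split(' ').filter(Boolean));
  return required.every((s) => granted.has(s));
}

// Hypothetical handler logic: map the scope check to an HTTP status code.
function authorizeRequest(
  claims: VerifiedClaims | null,
  required: string[],
): 200 | 401 | 403 {
  if (!claims) {
    return 401; // no valid token
  }
  return hasRequiredScopes(claims, required) ? 200 : 403; // 403: insufficient scope
}

const anneClaims: VerifiedClaims = { sub: 'user:anne', scope: 'read:orders' };

console.log(authorizeRequest(anneClaims, ['read:orders'])); // 200
console.log(authorizeRequest(anneClaims, ['write:orders'])); // 403
console.log(authorizeRequest(null, ['read:orders'])); // 401
```

Distinguishing 401 from 403 also lets the calling tool report whether the user needs to log in again or simply lacks the permission.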

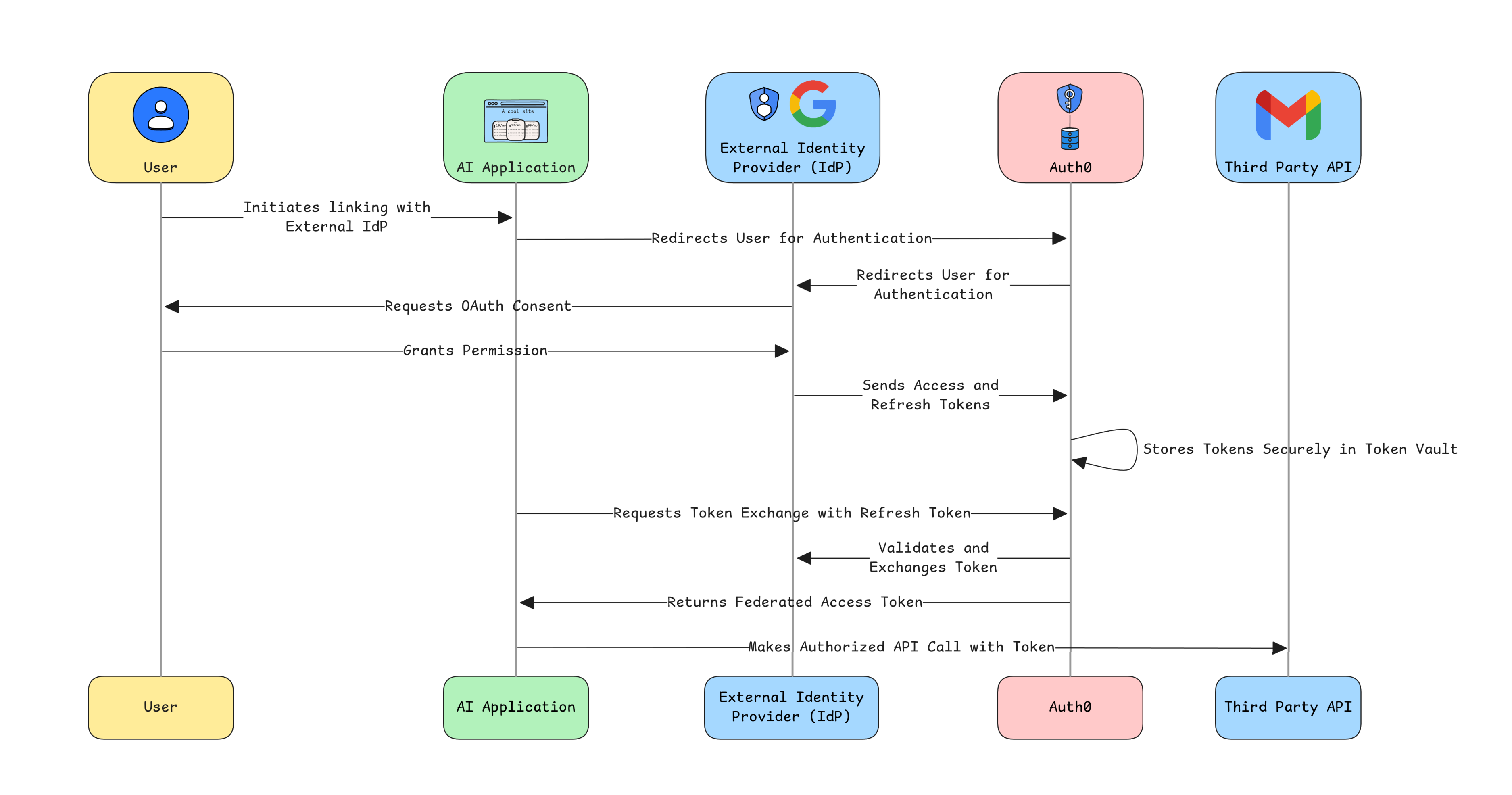

Third-party APIs

What about external services like Google Calendar or Slack? Storing API keys or credentials for every user is not scalable or secure. The solution is OAuth Federation and a service like the Auth0 Token Vault.

Here’s the flow:

- The user connects their third-party account (e.g., Google) to your application once.

- A service like Auth0 Token Vault securely stores the connection details and refresh tokens.

- When the agent needs to access the third-party API, it asks Auth0 Token Vault for a short-lived access token for that specific service.

- The agent uses that token to make the API call. It never sees the long-term credentials.
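
The steps above can be sketched with a small in-memory stand-in. This is not how Auth0 Token Vault is implemented. `SketchTokenVault`, `ShortLivedToken`, and `isUsable` are hypothetical names, and the token exchange is faked. The point is only that the refresh token stays inside the vault while the agent handles a token that expires.

```typescript
// Hypothetical in-memory vault; a real one would encrypt stored tokens and
// call the provider's token endpoint.
type ShortLivedToken = { accessToken: string; expiresAt: number };

class SketchTokenVault {
  // userId -> provider -> refresh token. Never exposed outside the class.
  private readonly refreshTokens = new Map<string, Map<string, string>>();
  private issued = 0;

  // Steps 1 and 2: the user connects an account once; the vault keeps the refresh token.
  connect(userId: string, provider: string, refreshToken: string): void {
    const byProvider = this.refreshTokens.get(userId) ?? new Map<string, string>();
    byProvider.set(provider, refreshToken);
    this.refreshTokens.set(userId, byProvider);
  }

  // Step 3: exchange the stored refresh token for a short-lived access token.
  getAccessToken(userId: string, provider: string, now: number, ttlMs = 300000): ShortLivedToken {
    const refreshToken = this.refreshTokens.get(userId)?.get(provider);
    if (!refreshToken) {
      throw new Error(`No ${provider} connection for ${userId}`);
    }
    // Stand-in for the real refresh-token exchange with the provider.
    this.issued += 1;
    return { accessToken: `access-${provider}-${this.issued}`, expiresAt: now + ttlMs };
  }
}

// Step 4: the agent-side tool only ever handles the short-lived token.
function isUsable(token: ShortLivedToken, now: number): boolean {
  return now < token.expiresAt;
}

const vault = new SketchTokenVault();
vault.connect('user:anne', 'google-oauth2', 'refresh-secret');
const calendarToken = vault.getAccessToken('user:anne', 'google-oauth2', 0);

console.log(isUsable(calendarToken, 1000)); // true
console.log(isUsable(calendarToken, 300000)); // false: the token has expired
```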

This pattern makes integrating with third-party tools both secure and seamless. Here is an example of calling the Google Calendar API from a custom tool:

```typescript
import { addHours, formatISO } from 'date-fns';

// Connection for Google services
export const withGoogleConnection = auth0AI.withTokenVault({
  connection: 'google-oauth2',
  scopes: ['https://www.googleapis.com/auth/calendar.freebusy'],
  refreshToken: getRefreshToken,
});

// Wrapped tool
export const checkUsersCalendarTool = withGoogleConnection(
  tool({
    description: 'Check user availability on a given date time on their calendar',
    parameters: z.object({ date: z.coerce.date() }),
    execute: async ({ date }) => {
      // Get the access token from Auth0 AI
      const accessToken = getAccessTokenFromTokenVault();

      // Google SDK
      const calendar = getGoogleCalendar(accessToken);
      const response = await calendar.freebusy.query({
        requestBody: {
          timeMin: formatISO(date),
          timeMax: addHours(date, 1).toISOString(),
          timeZone: 'UTC',
          items: [{ id: 'primary' }],
        },
      });

      // Number of busy slots in the one-hour window (0 means the user is free)
      return response.data?.calendars?.primary?.busy?.length;
    },
  }),
);
```

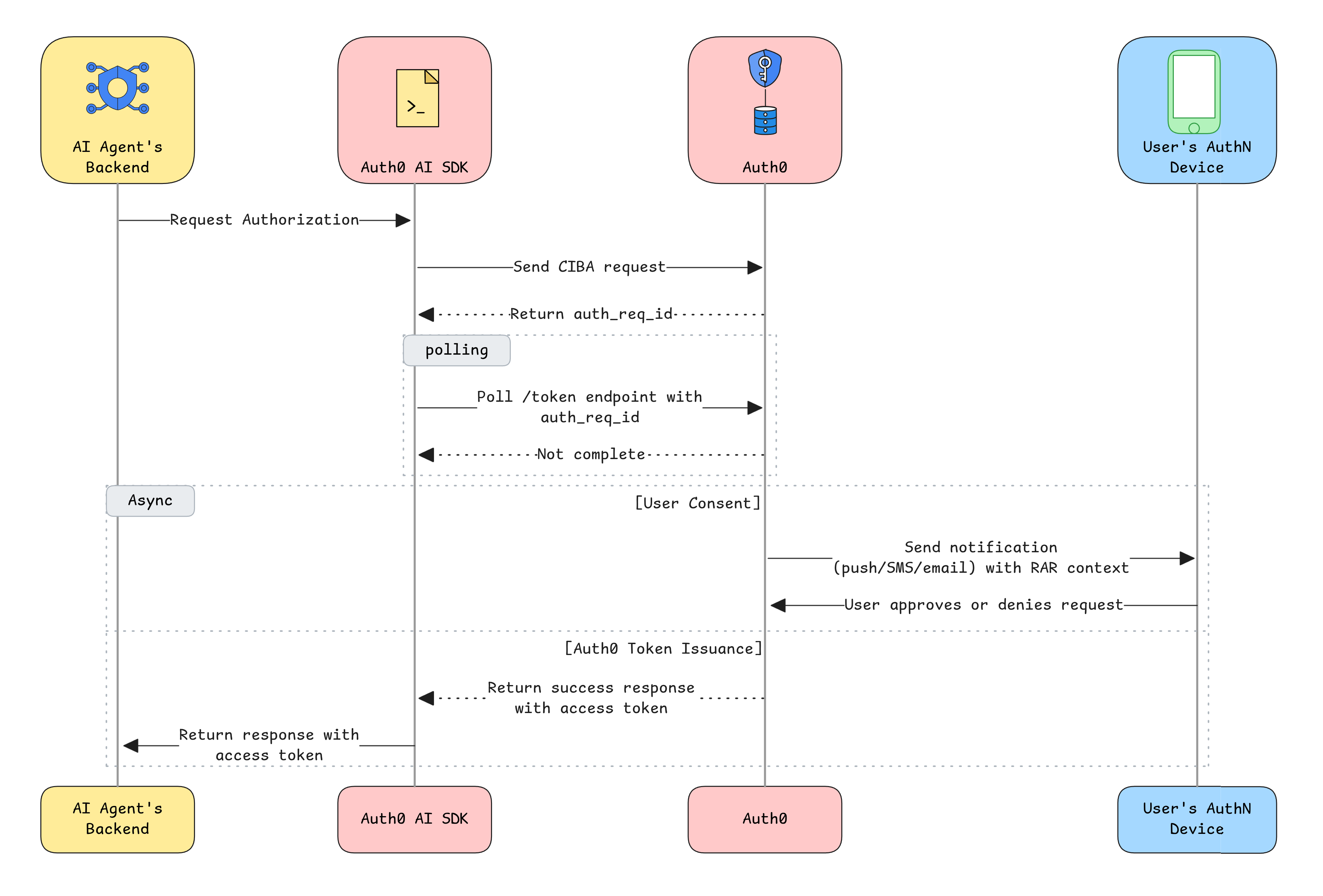

3. Keeping a human in the loop for critical actions

Some actions are too sensitive to be fully automated. Deleting a database, transferring funds, or sending a company-wide email are actions that demand explicit, real-time user consent.

For these scenarios, we can implement asynchronous authorization using Client-Initiated Backchannel Authentication (CIBA). CIBA is an OpenID Connect flow, built on OAuth 2.0, that is designed for decoupled authentication: the user authenticates and approves a request on their own device, while a different app or device receives the tokens. That separation makes it a good fit for AI agents as well.

Here’s how it works:

- The AI agent wants to perform a critical action (e.g., "buy 100 shares of OKTA").

- It sends an authorization request to the authentication server.

- The server sends a push notification to the user's trusted device (e.g., mobile phone).

- The user must approve or deny the request directly on their device.

- The agent polls the server and only proceeds if it receives explicit approval.
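
The polling step above can be sketched as a loop that waits for an explicit decision and treats anything else as a refusal. `ApprovalStatus`, `waitForApproval`, and `buyWithConsent` are hypothetical names, and `checkStatus` stands in for a call to the authorization server. A real client would also follow the polling interval and expiry the server returns.

```typescript
type ApprovalStatus = 'pending' | 'approved' | 'denied';

// Polls a status callback until the user decides or the attempts run out.
// `checkStatus` is a hypothetical stand-in for a call to the authorization server.
async function waitForApproval(
  checkStatus: () => Promise<ApprovalStatus>,
  options: { intervalMs: number; maxAttempts: number },
): Promise<'approved' | 'denied' | 'expired'> {
  for (let attempt = 0; attempt < options.maxAttempts; attempt++) {
    const current = await checkStatus();
    if (current !== 'pending') {
      return current; // explicit decision from the user
    }
    await new Promise<void>((resolve) => setTimeout(resolve, options.intervalMs));
  }
  return 'expired'; // no decision in time: treated the same as a denial
}

// The critical action runs only on explicit approval.
async function buyWithConsent(
  checkStatus: () => Promise<ApprovalStatus>,
): Promise<string> {
  const decision = await waitForApproval(checkStatus, { intervalMs: 10, maxAttempts: 5 });
  return decision === 'approved'
    ? 'Order placed'
    : `Order not placed (${decision})`;
}
```

Note that a timeout never falls through to the action. Silence from the user is not consent.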

This "human-in-the-loop" pattern provides a critical safety net, ensuring that the agent remains an assistant, not an unchecked decision-maker. Let's see an example of asynchronous authorization using Auth0:

```typescript
export const withAsyncAuthorization = auth0AI.withAsyncAuthorization({
  userID: async () => {
    const user = await getUser();
    return user?.sub as string;
  },
  // Message shown to the user on their device when asking for approval
  bindingMessage: async ({ product, qty }) => `Do you want to buy ${qty} of ${product}`,
  scopes: ['openid', 'product:buy'],
  audience: process.env['AUDIENCE']!,
  onUnauthorized: async (e: Error) => {
    if (e instanceof AccessDeniedInterrupt) {
      return 'The user has denied the request';
    }
    return e.message;
  },
});

export const shopOnlineTool = withAsyncAuthorization(
  tool({
    description: 'Tool to buy products online',
    parameters: z.object({
      product: z.string(),
      qty: z.number(),
      // Declared in the schema so that `execute` can destructure it
      priceLimit: z.number().optional(),
    }),
    execute: async ({ product, qty, priceLimit }) => {
      const credentials = getAsyncAuthorizationCredentials();
      const accessToken = credentials?.accessToken;
      // Use access token to call first party APIs
      return `Ordering ${qty} ${product} with price limit ${priceLimit}`;
    },
  }),
);
```

Learn more and secure your AI agents

Excessive Agency is a serious threat, but it's one we can manage with the right security architecture. By adopting a Zero Trust mindset and leveraging modern identity protocols, we can build AI agents that are both powerful and safe. The key is to:

- Treat every tool call as a security checkpoint

- Enforce granular authorization

- Delegate identity with OAuth

- Require human consent for critical actions

Discover how Auth0 for AI Agents can address these challenges, enabling secure access to your tools, workflows, and users’ data. Get started today.

We invite you to explore these concepts further with our sample applications, like Assistant0 on GitHub.

About the author

Deepu K Sasidharan

Principal Developer Advocate